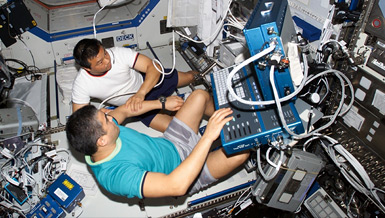

Astronauts operating in spacecraft with complex three-dimensional (3D) architecture have reported spatial disorientation and navigation problems, which can complicate responses to emergencies. In weightlessness, astronauts can take up any body orientation and must navigate in three dimensions, but these challenges cannot be simulated in mockups on the ground.

NSBRI Postdoctoral Fellow Dr. Hirofumi Aoki has designed a virtual reality-based training method as a countermeasure to inflight spatial disorientation and 3D navigation problems. Subjects perform navigational exercises and emergency egress tasks in a realistic virtual simulation of the International Space Station (ISS) under both normal and smoke-obstructed conditions. The results should define procedures for preflight spatial disorientation and navigation training, as well as design standards for future spacecraft.