Orientation, navigation and spatial memory problems can affect an astronaut’s performance during missions. Dr. Charles M. Oman and colleagues are studying three-dimensional spatial memory and navigation with the aim of developing pre-flight and in-flight visual orientation training countermeasures to help astronauts quickly learn the three-dimensional layout of the International Space Station. Their research includes studies of humans and animals and employs virtual reality techniques, tumbling rooms and parabolic flight experiments.

Overview

Visual Orientation and Spatial Memory: Mechanisms and Countermeasures

Principal Investigator:

Charles M. Oman, Ph.D.

Organization:

Massachusetts Institute of Technology

Harvard-MIT Division of Health Sciences and Technology

Technical Summary

Specific Aims

- To quantify how environmental geometric frame and object polarity cues determine human visual orientation in order to support engineering and design of spacecraft work areas.

- To develop reliable means for quantifying head-movement-contingent oscillopsia.

- To determine whether pre-flight VR techniques can improve astronaut 3D spatial memory and navigation abilities by reducing direction vertigo and teaching International Space Station (ISS) configuration and emergency egress routes.

- To improve astronaut teleoperation performance by taking into account the mental object rotation and perspective-taking abilities of individuals during training and operations.

- Visual frame and polarity effects in tilted rooms and in an immersive visual virtual environment (IVY), examining the effect of room aspect ratio and observer field of view;

- The perceptual upright as measured using a new OCHART method ("p" vs. "d" letter recognition), with results analyzed using a linear vector summation model; and

- Oscillopsia during Coriolis stimulation, quantified using a new visual feedback technique.
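
As a rough illustration of what a linear vector summation model of the perceptual upright can look like, the sketch below treats each orientation cue as a vector in the roll plane, scales it by a weight, and takes the direction of the sum as the predicted upright. The function name, the choice of cues, and the weight values are assumptions made for this example only; they are not the fitted parameters or the exact formulation used in the studies described here.

```python
import math


def perceptual_upright_deg(cues):
    """Direction of the weighted vector sum of orientation cues.

    `cues` is a list of (direction_deg, weight) pairs. Directions are
    measured in the roll plane from a reference "up" axis (0 degrees).
    All names and weights here are hypothetical, for illustration only.
    """
    x = 0.0  # component perpendicular to the reference axis
    y = 0.0  # component along the reference axis
    for direction_deg, weight in cues:
        angle = math.radians(direction_deg)
        x += weight * math.sin(angle)
        y += weight * math.cos(angle)
    if math.hypot(x, y) == 0.0:
        raise ValueError("cue vectors cancel; upright direction is undefined")
    return math.degrees(math.atan2(x, y))


# Hypothetical example: a visual frame tilted 20 degrees, with gravity and
# the body axis both aligned with the reference axis.
example = perceptual_upright_deg([(20.0, 0.3), (0.0, 0.5), (0.0, 0.2)])
```

In this sketch, setting the gravity weight to zero gives a simple stand-in for weightlessness, where only the visual and body cues contribute to the predicted upright.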

Massachusetts Institute of Technology (MIT) completed a series of four "relearning, reoriented spacecraft modules" experiments, designed to simulate the training experience of astronauts who learn the interiors of individual spacecraft modules in a locally upright configuration in ground simulators, but who have to make spatial judgments when the modules are assembled in a different flight configuration. We showed that subjects remember each module in a visually upright, canonical orientation, and therefore have to make mental rotations in order to interrelate the two modules. This year MIT tested different flight configurations and found that performance was best when visual verticals were co-aligned, intermediate for 180-degree orientations, and worst when modules were rotated through 90 degrees. Our results account for the visualization difficulties and disorientation previously reported by Apollo, Mir and ISS astronauts when transiting certain areas of their spacecraft. The result could easily be translated into a design standard for space stations and docked vehicle operations.

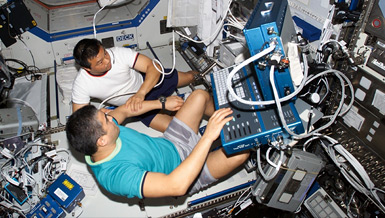

MIT also completed two ISS emergency egress training studies of 3D, six-degree-of-freedom navigation performance, quantifying the effect of training in a locally versus globally upright configuration, with and without smoke obscuration. Most subjects learned quickly, but performance correlated with individual 3D mental rotation and perspective-taking skills. This study, led by Dr. Aoki, won the 2007 Young Investigator Award from the Aerospace Medical Association's Space Medicine Branch. This year we also compared the performance of subjects trained using a non-immersive laptop display with that of a similarly sized group tested last year using an immersive display. Although immersive displays better simulate the vestibular and haptic cues required to orient spatially, our subjects performed almost as well using the laptop. Finally, as planned, MIT completed development of a space telerobotic training simulator and showed that individual mental rotation and perspective-taking abilities influence performance during training.

Results of the York University and MIT studies have been presented at several international meetings, and full manuscripts have been published or are currently in submission. Dr. Oman also published a review article on visual orientation in microgravity, which summarizes our research in a broader context.